AS non-invasive prenatal testing becomes more advanced, questions of informed consent, clinical utility and ethics become more complicated for clinicians and more anxiety-provoking for parents, say experts.

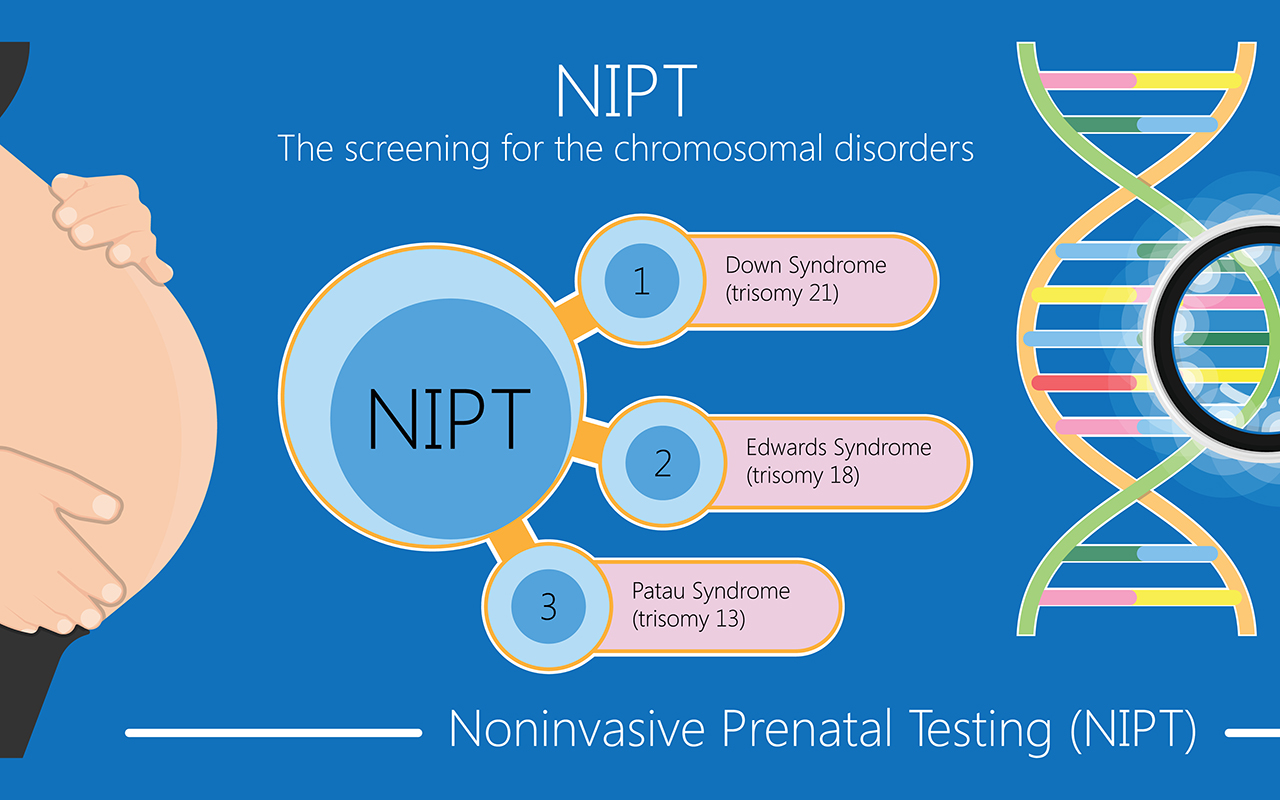

Writing in the MJA (free access for one week), Dr Joseph Thomas, a senior specialist in maternal–fetal medicine at Mater Health Services in Brisbane, and colleagues said that non-invasive prenatal testing (NIPT), introduced in 2010, was “revolutionary, with sensitivity, specificity and detection rates unmatched”.

“NIPT was found to achieve a detection rate for Down syndrome of 99.7%, with a false positive rate of 0.04%,” Thomas and colleagues wrote.

However, as the technology advanced, some NIPT providers started to offer extended panels and low-resolution whole genomic sequencing (WGS), which screen for sex chromosome aneuploidies, recurrent microdeletions, and subchromosomal deletions and duplications.

“This comes at a cost of a higher false positive rate and lower positive predictive value,” Thomas and colleagues wrote.

“Moreover, the expanded panels and WGS NIPT raise issues of clinical utility and ethical concerns.”
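The link between false positive rate and positive predictive value follows from Bayes' rule, and a rough calculation shows why rarer targets are harder to screen for. The sketch below is illustrative only: the 99.7% detection rate and 0.04% false positive rate are the figures quoted above, while the prevalence values and the extended-panel figures are hypothetical assumptions chosen for the example, not numbers from the MJA article.

```python
def positive_predictive_value(sensitivity, false_positive_rate, prevalence):
    """Probability that a positive screening result is a true positive (Bayes' rule)."""
    true_positives = sensitivity * prevalence
    false_positives = false_positive_rate * (1 - prevalence)
    return true_positives / (true_positives + false_positives)


# Figures quoted in the article for Down syndrome screening.
# The prevalence of 1 in 500 is an assumption made for illustration.
ppv_down_syndrome = positive_predictive_value(
    sensitivity=0.997,
    false_positive_rate=0.0004,
    prevalence=1 / 500,
)

# Hypothetical extended-panel target: all three inputs are assumed values
# (rarer condition, lower detection rate, higher false positive rate).
ppv_rare_target = positive_predictive_value(
    sensitivity=0.90,
    false_positive_rate=0.001,
    prevalence=1 / 5000,
)

print(f"PPV, common target (assumed prevalence 1 in 500): {ppv_down_syndrome:.1%}")
print(f"PPV, rare target (all inputs hypothetical):       {ppv_rare_target:.1%}")
```

Under these assumptions, most positive results for the rarer target would be false positives even though the test's false positive rate is still only 0.1%, which is the trade-off the authors describe.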

Ethical concerns include:

- the challenges in providing adequate information arising from the complexity of the tests – “From an ethical perspective, however, it is the understanding of information that is important, not merely that a person was given the legally required information”;

- the risk of power imbalances and “normalisation” of testing – “whereby a patient simply agrees because ‘doctor knows best’ and, second, the impression that NIPT is a normal part of care that it would be foolish to reject”;

- anxiety resulting from complex and potentially unnecessary medical decisions – “higher levels of decisional regret among parents whose results identified variation of uncertain significance”;

- the problem of screening for “normality” and genetic reductionism – “just because a genetic anomaly can be identified does not necessarily mean that it would be phenotypically expressed. Similarly, detection of genes associated with adult onset disease does not necessarily equate to disease”; and

- the doctor’s responsibility in determining which NIPT test is clinically indicated – “screening should be recommended or chosen only if there is likely to be a proportionate benefit, and there is no disproportionate burden”.

Thomas and colleagues made the following recommendations:

- Informed consent is required for all NIPT tests, especially in the context of extended panels and WGS NIPT. Clinicians must understand the different abnormalities targeted by extended NIPT panels and be able to assess and communicate the clinical utility of screening in accordance with a particular patient’s needs, desires and circumstances.

- If ordering WGS NIPT, given that there may be significant uncertainty as to the actual phenotypic or functional manifestation of a genetic variation in a particular child, the consent process should include helping to contextualise limitations and risks in the broader context of the human experience of risk and uncertainty.

- Genuine shared decision-making models can empower patient autonomy by helping them to understand the implications of their possible decisions in relation to their values. Moreover, decision tools and algorithms that align a variety of scenarios with personal values can facilitate a high quality informed consent process.

- Higher resolution WGS NIPT should only be used for research purposes until we have robust data regarding its clinical utility.

Also online at the MJA:

Artificial intelligence in medicine: lowering the barriers

RAPID advances in artificial intelligence in ophthalmology are a harbinger of things to come for other fields of medicine, say experts.

Writing in the MJA (free access for one week), Associate Professor Peter van Wijngaarden, Principal Investigator at the Centre for Eye Research Australia and the University of Melbourne’s Department of Surgery, and colleagues said that “ophthalmology is at the vanguard of the development and clinical application of AI”.

“Leading uses of the technology include detecting, classifying and triaging a range of diseases, such as diabetic retinopathy, age-related macular degeneration (AMD), glaucoma, retinopathy of prematurity, and retinal vein occlusion, from clinical images,” van Wijngaarden and colleagues wrote.

“Several algorithms have achieved performance that meets or exceeds that of human experts. Accordingly, in 2018, the United States Food and Drug Administration approved an AI system to detect referable diabetic retinopathy from retinal photographs, the first autonomous diagnostic system to be approved in any field of medicine.”

Despite these and other technological advances, AI systems are not yet in widespread clinical use.

“The training of deep learning systems requires access to large amounts of medical data which has significant implications relating to privacy and data protection,” van Wijngaarden and colleagues wrote.

“In the context of ophthalmology, this is particularly pertinent, as the retinal vasculature may be considered biometric data, making it impossible to completely anonymise retinal photographs. Furthermore, characteristics that are not visible to human examiners, such as age and sex, can now be accurately predicted from a single retinal photograph using deep learning.

“Another challenge to the acceptance of deep learning algorithms in medicine is the difficulty in determining the basis for clinical decisions made by these systems, informally described as the ‘black box’ problem.

“In traditional malpractice cases, a physician may be asked to justify the basis for a particular clinical decision and this is then considered in light of conventional medical practice.

“In comparison, challenges in identifying the basis for a given decision made by AI might pose problems for clinicians whose actions were based on that decision,” they said.

“The extent to which the clinician, as opposed to the technology manufacturer, should be held accountable for harm arising from AI use is a subject of intense debate.”

In Australia, work is under way to minimise these barriers.

“The Australian Government, through the CSIRO and Data61; the Australian Council of Learned Academies; the Australian Academy of Health and Medical Sciences; and specialty groups, such as the Royal Australian and New Zealand College of Radiologists, have made significant efforts to develop frameworks and policies for the effective and ethical development of AI,” van Wijngaarden and colleagues wrote.

“These consultative works have highlighted key priorities, including building a specialist AI workforce, ensuring effective data governance and enabling trust in AI through transparency and appropriate safety standards.

“While these technologies may eventually lead to more efficient, cost-effective and safer health care, they are not a panacea in isolation,” they concluded.

“The successful integration of AI into health systems will need to first consider patient needs, ethical challenges and the performance limits of individual systems.”

Perspective

The value proposition of investigator-initiated clinical trials conducted by networks

Tate et al; doi: 10.5694/mja2.50935 … FREE ACCESS for one week.

Podcast

Professor Christopher Maher, Director of the Institute for Musculoskeletal Health at the University of Sydney, discusses the Medical Research Future Fund’s allocation of money in relation to burden of disease … OPEN ACCESS permanently.
