Individuals diagnosed with transient ischaemic attack (TIA) or ischaemic stroke are at high risk of recurrent vascular events.1,2 Current Australian guidelines recommend continued use of antihypertensive, antithrombotic and lipid-lowering medicines after TIA or ischaemic stroke to reduce the risk of a recurrent ischaemic event, unless contraindications exist.3

In Australia, a national audit is conducted every 2 years to assess the quality of acute stroke care, including use of secondary stroke prevention medicines at the time of hospital discharge.4 However, few studies have examined use of secondary stroke prevention medicines after discharge from hospital.5,6 Of those available, none have assessed changes in use of these medicines at the national level, and it is unclear whether use has increased since the release of Australia’s first stroke management guidelines in 2003. Consequently, the aim of this study was to examine national trends in the use of secondary stroke prevention medicines by TIA and ischaemic stroke survivors to determine whether use has increased over time.

Methods

A retrospective observational study was conducted using data from the Australian Government Department of Veterans’ Affairs (DVA) administrative health claims database. The database contains details of all hospital and pharmaceutical claims subsidised by DVA for Australian veterans and their eligible dependents. At the end of 2009, the treatment population consisted of 263 433 veterans.7

Patients discharged alive after an episode of care for TIA (identified by International classification of diseases, 10th revision, Australian modification [ICD-10-AM] codes G45.0, G45.1, G45.2, G45.8 and G45.9) or ischaemic stroke (code I63) between 1 January 2000 and 31 December 2009 were eligible for inclusion. All included patients were eligible for DVA subsidisation of all health services. Because patients may have multiple claims recorded for treatment of the same event,8 we assessed consecutive hospital claims after each TIA or ischaemic stroke claim up to 30 June 2010. Data rules established in consultation with clinicians8 were used to link stroke-related separations and determine final discharge dates.
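The code-based selection described above can be sketched as follows. This is a minimal illustration only, not the study's actual extraction logic: the function name `classify_episode` is hypothetical, and matching on the primary diagnosis code is taken from the description of case selection in the Discussion.

```python
from typing import Optional

# ICD-10-AM codes listed in the Methods for transient ischaemic attack.
TIA_CODES = {"G45.0", "G45.1", "G45.2", "G45.8", "G45.9"}
# Ischaemic stroke is identified by code I63 (including its subcodes).
ISCHAEMIC_STROKE_PREFIX = "I63"


def classify_episode(primary_diagnosis: str) -> Optional[str]:
    """Return the disease cohort for a primary diagnosis code, or None.

    Hypothetical helper: codes outside the listed sets (for example
    I64, unspecified stroke) are not assigned to either cohort.
    """
    code = primary_diagnosis.strip().upper()
    if code in TIA_CODES:
        return "TIA"
    if code.startswith(ISCHAEMIC_STROKE_PREFIX):
        return "ischaemic stroke"
    return None
```

Under these assumptions, `classify_episode("I63.3")` is assigned to the ischaemic stroke cohort, while `classify_episode("I64")` is excluded.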

The proportion of patients using secondary stroke prevention medicines was determined monthly, commencing in January 2003. Each month, the cohort included all patients aged ≥ 65 years who had had a previous episode of care for TIA or ischaemic stroke recorded between 1 January 2000 and the month under study. Patients with a previous episode of care for both TIA and ischaemic stroke were eligible for inclusion in both cohorts. Patients were included each month until either their death or the end of the study period.
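A rough sketch of the monthly cohort rule is given below. It rests on several assumptions not stated in the text: age is evaluated on the first day of the month, the qualifying discharge must precede that day, and a patient who dies during a month is still counted for that month. The function names are hypothetical.

```python
from datetime import date
from typing import Optional

# Earliest discharge date that qualifies a patient for the cohort.
EVENT_WINDOW_START = date(2000, 1, 1)


def age_on(day: date, birth: date) -> int:
    """Age in completed years on a given day."""
    years = day.year - birth.year
    if (day.month, day.day) < (birth.month, birth.day):
        years -= 1
    return years


def in_monthly_cohort(month_start: date, discharge: date, birth: date,
                      death: Optional[date] = None) -> bool:
    """True if the patient counts towards the cohort for this month.

    Assumed rule: a qualifying discharge on or after 1 January 2000 and
    before the month starts, aged >= 65 years, and not deceased before
    the month starts.
    """
    had_event = EVENT_WINDOW_START <= discharge < month_start
    alive = death is None or death >= month_start
    return had_event and alive and age_on(month_start, birth) >= 65
```

Evaluating this for every month from January 2003 to December 2009 would give the monthly denominators; a patient with both a TIA and an ischaemic stroke episode would be evaluated separately for each disease cohort.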

To determine the number of patients dispensed recommended medicines each month, all claims for antihypertensives (identified by the World Health Organization Anatomical Therapeutic Chemical classification codes C02, C03, C07, C08 and C09 [excluding C08EX02, perhexiline]), antithrombotics (code B01A [excluding B01AD, thrombolytics]) and lipid-lowering medicines (code C10) between 1 July 2002 (to include medications taken at, but dispensed before, study commencement) and 31 December 2009 were extracted. As dosage information is not available from the database, prescription durations were used as a measure of duration of use of each medicine. The prescription durations were calculated from the DVA pharmaceutical claims dataset and represent the time in which 75% of prescriptions for an individual item were refilled. Use of each of the three classes of medicine, combined treatment with antihypertensive and antithrombotic therapy, and treatment with a combination of all three classes of medicine were determined for all patients still living each month.
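The two steps in this paragraph, assigning claims to a medicine class and deriving a prescription duration, might look something like the sketch below. The function names are hypothetical, and the nearest-rank percentile is an assumption: the text does not say how the 75% refill time was computed.

```python
import math
from typing import Optional, Sequence

# ATC prefixes named in the Methods for antihypertensives.
ANTIHYPERTENSIVE_PREFIXES = ("C02", "C03", "C07", "C08", "C09")


def medicine_class(atc_code: str) -> Optional[str]:
    """Map an ATC code to a secondary prevention class, or None."""
    code = atc_code.strip().upper()
    # Exclusions stated in the Methods: perhexiline and thrombolytics.
    if code.startswith("C08EX02") or code.startswith("B01AD"):
        return None
    if code.startswith(ANTIHYPERTENSIVE_PREFIXES):
        return "antihypertensive"
    if code.startswith("B01A"):
        return "antithrombotic"
    if code.startswith("C10"):
        return "lipid-lowering"
    return None


def prescription_duration(refill_gaps_days: Sequence[int]) -> int:
    """Days within which 75% of prescriptions for an item were refilled.

    Assumption: nearest-rank 75th percentile of the observed gaps
    between consecutive dispensings of the same item.
    """
    if not refill_gaps_days:
        raise ValueError("at least one refill gap is required")
    ordered = sorted(refill_gaps_days)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]
```

With the illustrative (made-up) gaps `[28, 30, 30, 31, 35, 40, 45, 60]`, the nearest-rank rule returns 40 days; a patient would then be treated as using the item for 40 days after each dispensing.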

Prevalence of use each month (January 2003 to December 2009) was age and sex standardised using the DVA population in January 2003 to account for changes in population characteristics over time. After standardisation, Poisson regression models with generalised estimating equations were used to test for trends in medicine use, using an autoregressive working correlation matrix to adjust for serial correlation. The regression models compared the rate of medicine use in 1 year with the rate in the previous year to test for linear trends between 2003 and 2009. Separate models were used for each treatment and diagnosis combination. All analyses were performed using SAS version 9.4 (SAS Institute).
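To make the standardisation step concrete, the sketch below shows direct age and sex standardisation against a fixed reference population, together with the conversion of a rate ratio to an average annual percentage change as reported in Box 2. It assumes direct standardisation was the method used, and it does not attempt to reproduce the Poisson regression with generalised estimating equations. The strata, rates and counts in the usage note are invented for illustration.

```python
from typing import Dict, Hashable


def direct_standardised_rate(stratum_rates: Dict[Hashable, float],
                             reference_counts: Dict[Hashable, float]) -> float:
    """Weighted mean of stratum-specific rates.

    Weights are the reference population counts in each age-sex stratum
    (here assumed to be the DVA population in January 2003).
    """
    total = sum(reference_counts.values())
    weighted = sum(stratum_rates[s] * n for s, n in reference_counts.items())
    return weighted / total


def annual_percent_change(rate_ratio: float) -> float:
    """Convert a year-on-year rate ratio to a percentage change."""
    return (rate_ratio - 1.0) * 100.0
```

For example, with hypothetical stratum rates of 70, 80, 60 and 75 per 100 patients and reference counts of 200, 400, 100 and 300, the standardised rate is 74.5 per 100 patients. A rate ratio of 1.016 corresponds to an average annual change of +1.6%, matching the antihypertensive row for the TIA cohort in Box 2.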

This study was approved by the University of South Australia and DVA human research ethics committees.

Results

A total of 19 019 patients were included in our analysis. Of these, 403 patients (2.1%) were included in both disease cohorts for at least 1 month during the study period. The characteristics of those included at the start and end of the study are described in Box 1.

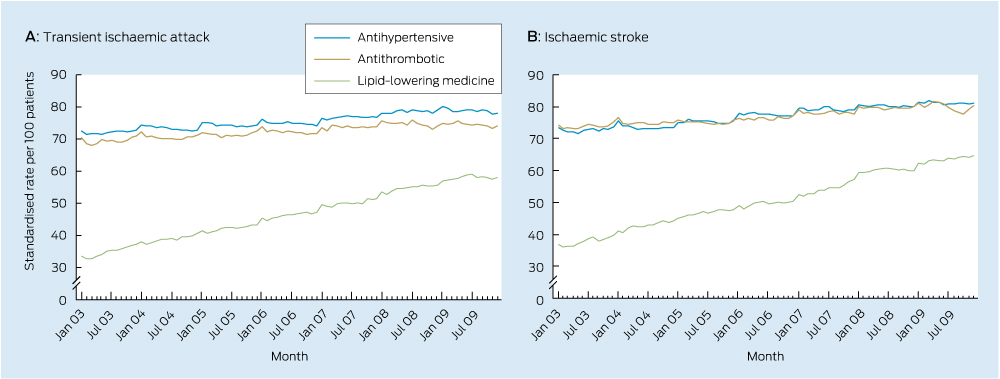

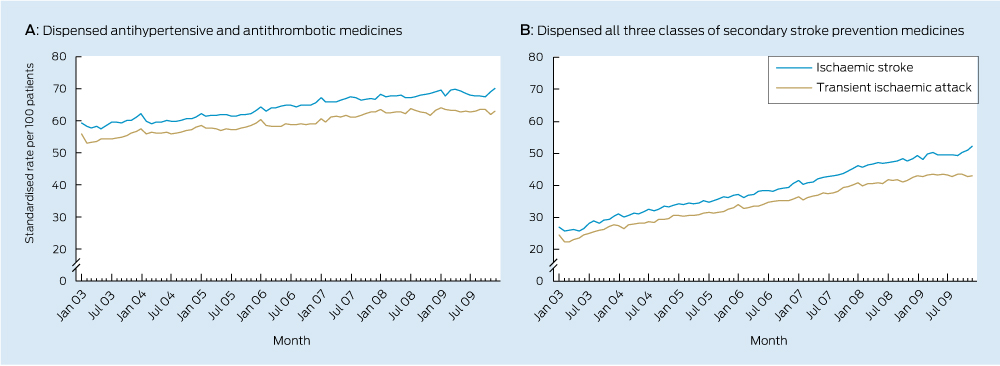

Significant increases in use of each class of secondary stroke prevention medicine occurred during the study period (Box 2, Box 3). There was also an increase in the total number of guideline-recommended medicines taken by survivors (Box 2, Box 4) with a near doubling in prevalence of the combined use of all three recommended medicines.
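The "near doubling" can be checked directly from the standardised rates for triple therapy in Box 2. The short sketch below is only an arithmetic illustration; the variable and function names are hypothetical.

```python
# Standardised monthly rates of triple therapy per 100 patients,
# (January 2003, December 2009), taken from Box 2.
TRIPLE_THERAPY_RATES = {
    "TIA": (24.4, 43.0),
    "ischaemic stroke": (26.9, 52.3),
}


def fold_change(start_rate: float, end_rate: float) -> float:
    """Ratio of the end rate to the start rate."""
    return end_rate / start_rate


for cohort, (start, end) in TRIPLE_THERAPY_RATES.items():
    print(f"{cohort}: {fold_change(start, end):.2f}-fold")
```

This gives roughly a 1.76-fold increase for the TIA cohort and a 1.94-fold increase for the ischaemic stroke cohort.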

Discussion

This is the first Australian study to examine national trends in the use of secondary stroke prevention medicines among patients with a previous TIA or ischaemic stroke. The median time patients had spent in the cohort was 1.3 years in January 2003 and 3.4 years in December 2009, meaning the trends reflect use among the prevalent population rather than among patients with a recent event. The increased use observed in this study suggests practice is moving towards guideline recommendations. Despite this, only about half of the population were dispensed medicines from all three recommended classes in December 2009, suggesting there may be opportunity to further increase use of these medicines among the older population.

These findings are consistent with results from international studies conducted within the general practice population over a similar period.9,10 A large study from the United Kingdom showed use of antihypertensives in the year after a first stroke increased from approximately 50% to 70% between 1999 and 2008, antiplatelet use increased from 60% to 75%, and use of lipid-lowering therapy increased from 15% to 80%.10 Large increases in use of lipid-lowering therapy were also shown in a Danish population-based study, with use among ischaemic stroke survivors increasing from 40% to 65% between 2004 and 2010.11

Along with the release and dissemination of national stroke guidelines (which were regularly updated during the study period), other quality use of medicines initiatives may have contributed to the increased use observed in this study. Stroke-specific sections were included in yearly editions of Australia’s national formulary (the Australian medicines handbook) and updated versions of Therapeutic guidelines (neurology). Evidence-based stroke prevention and management was also reviewed in Australian prescriber12 and targeted by National Prescribing Service initiatives.13 The study population and their general practitioners would have received information about antithrombotics through the Veterans’ Medicines Advice and Therapeutics Education Services (Veterans’ MATES) program during the study period. Additional factors likely to have had an impact on the use of lipid-lowering medicines include the publication of a landmark trial14 and changes to eligibility criteria for subsidisation of these medicines through Australia’s national pharmaceutical subsidy scheme15 during 2006.

Factors influencing the use of secondary stroke prevention medicines in older populations are complex, and may be related to lack of awareness of guideline recommendations, prescriber-related factors (such as concern about the lack of evidence to guide secondary prevention among older patients and potential harms of treatment) or patients’ preferences.16 Although there may be room for improvement in use of these medicines, our results reflect use among all survivors, as we lacked clinical information necessary to exclude those with treatment contraindications or previous adverse reactions. We do not expect all patients could be dispensed each medicine, as some older patients may be unsuitable for treatment on entering the cohort. For others, treatment priorities may change over time,17 and medicines for secondary prevention (such as lipid-lowering therapy) may be withdrawn during the late stages of life, or in those with severe physical impairment or cognitive deficit.18 The number of older patients ineligible for treatment may be significant. In a study assessing antithrombotic use by older patients with acute ischaemic stroke, more than one-third were excluded from the analysis, owing to contraindications or refusal of treatment at discharge.19 Trends in antithrombotic use observed in our study may be further underestimated, as aspirin can be purchased without a prescription in Australia (although patients included in this study had access to subsidised aspirin via prescription).

This study used hospital claims data to determine whether patients had a TIA or ischaemic stroke. To minimise selection bias, patients were selected using primary diagnosis codes and those with an unspecified stroke (ICD-10-AM code I64) were not included. There is high adherence to Australian standards for ICD-10-AM coding,20 and 95% of patients with a primary diagnosis code for stroke were correctly coded in a recent Australian audit.21

We expect that use of recommended medicines by patients included in this study is indicative of use by older Australians previously hospitalised for TIA or ischaemic stroke. Age-specific comparisons show veterans without a service-related disability and the general Australian population have similar use of pharmaceuticals, hospital services and GP visits.22 However, changes in medicine use observed in this study may not be generalisable to patients managed solely in the community setting, and without assessment of clinical records it is not known if treatment targets were attained.

The increased use of secondary stroke prevention medicines shown between 2003 and 2009 in this large cohort of older Australians with a previous TIA or ischaemic stroke is consistent with Australian stroke guideline recommendations and initiatives to support quality use of medicines during the study period.

1 Characteristics of patients who were included at the start and end of the study

| Characteristics, by disease cohort | Jan 2003 | Dec 2009 |
|---|---|---|
| Transient ischaemic attack | | |
| No. of patients | 2765 | 5242 |
| Age (years), median (IQR) | 81.0 (78.3–84.4) | 86.8 (84.1–89.5) |
| No. of men (%) | 1761 (63.7%) | 2716 (51.8%) |
| Time in cohort (years), median (IQR) | 1.3 (0.6–2.0) | 3.5 (1.6–6.1) |
| Ischaemic stroke | | |
| No. of patients | 2493 | 4302 |
| Age (years), median (IQR) | 80.9 (78.0–84.2) | 86.6 (84.1–89.2) |
| No. of men (%) | 1609 (64.5%) | 2376 (55.2%) |
| Time in cohort (years), median (IQR) | 1.3 (0.6–2.1) | 3.3 (1.5–5.8) |

2 Changes in use of secondary stroke prevention medicines by transient ischaemic attack and ischaemic stroke survivors between 2003 and 2009

| Medicines, by disease cohort | Standardised monthly rate of use (per 100 patients), Jan 2003 | Standardised monthly rate of use (per 100 patients), Dec 2009 | Standardised rate ratio (95% CI) | Average annual % change | P |
|---|---|---|---|---|---|
| Transient ischaemic attack | | | | | |
| Antihypertensive | 72.5 | 78.2 | 1.016 (1.015–1.016) | +1.6% | < 0.001 |
| Antithrombotic | 70.4 | 74.0 | 1.013 (1.011–1.014) | +1.3% | < 0.001 |
| Lipid-lowering | 33.5 | 58.0 | 1.087 (1.084–1.091) | +8.7% | < 0.001 |
| Antihypertensive + antithrombotic | 55.9 | 63.0 | 1.025 (1.023–1.027) | +2.5% | < 0.001 |
| Antihypertensive + antithrombotic + lipid-lowering | 24.4 | 43.0 | 1.094 (1.088–1.101) | +9.4% | < 0.001 |
| Ischaemic stroke | | | | | |
| Antihypertensive | 73.3 | 81.1 | 1.019 (1.019–1.020) | +1.9% | < 0.001 |
| Antithrombotic | 74.2 | 80.4 | 1.014 (1.013–1.015) | +1.4% | < 0.001 |
| Lipid-lowering | 36.8 | 64.8 | 1.088 (1.087–1.090) | +8.8% | < 0.001 |
| Antihypertensive + antithrombotic | 59.4 | 70.2 | 1.027 (1.025–1.028) | +2.7% | < 0.001 |
| Antihypertensive + antithrombotic + lipid-lowering | 26.9 | 52.3 | 1.102 (1.098–1.106) | +10.2% | < 0.001 |

3 Trends in monthly use of secondary stroke prevention medicines by patients previously hospitalised with a transient ischaemic attack or ischaemic stroke

4 Trends in monthly use of combination therapy by patients previously hospitalised with a transient ischaemic attack or ischaemic stroke
