Antimicrobial resistance is a serious threat to global health1–3 and there are few new antimicrobials in the pipeline, particularly for gram-negative bacteria.4 By ensuring rational prescribing, antimicrobial stewardship (AMS) plays a critical role in reducing the development of microbial resistance. Since the 2011 call for action by the World Health Organization,3 AMS activities have been increasing worldwide.

While guidelines exist for implementing hospital AMS programs,5,6 there is little information about what is being done in paediatric hospitals.7 We aimed to identify current AMS resources and activities for children in hospitals throughout Australasia, and to identify gaps in services.

Methods

Tertiary paediatric hospitals (children’s hospitals and combined adult and paediatric hospitals that offer tertiary paediatric care) were surveyed in every state and territory of Australia and the North and South Islands of New Zealand.

The survey was adapted with permission from a Victorian AMS survey that was developed in 2012 by the Victorian Department of Health and Melbourne Health’s AMS Research Group.8 Questions were adapted for the paediatric context and administered via a web-based survey by the Australian and New Zealand Paediatric Infectious Diseases Group – Australasian Stewardship of Antimicrobials in Paediatrics (ANZPID-ASAP) group. In June 2013, the survey was sent to a paediatric infectious diseases (ID) physician, paediatrician or pharmacist responsible for AMS at each hospital.

Data collected included: hospital demographics (paediatric and adult bed numbers, paediatric specialist services); types of AMS resources (a paediatric AMS program [a multidisciplinary program within the structure and governance of the hospital responsible for oversight of and strategies to improve antimicrobial prescribing], antimicrobial prescribing guidelines, ID personnel specifically funded for AMS, AMS pharmacist, other personnel); and AMS activities (education, approval for restricted antimicrobials, audit of antimicrobial use, monitoring of antimicrobial resistance, selective susceptibility reporting and point-of-care interventions). Group means were compared using unpaired t tests.

Ethics approval was not required as no patient data were included.

Results

The survey was completed by 14 hospitals: seven children’s hospitals, six hospitals that had a large majority of adults, and one hospital that had a majority of children plus a maternity unit. Paediatric bed numbers ranged from 40 to 300. At 12 hospitals, the survey was completed by paediatric ID physicians; at one it was completed by a paediatrician on the hospital AMS team, and at one it was completed by an AMS pharmacist.

Personnel resources

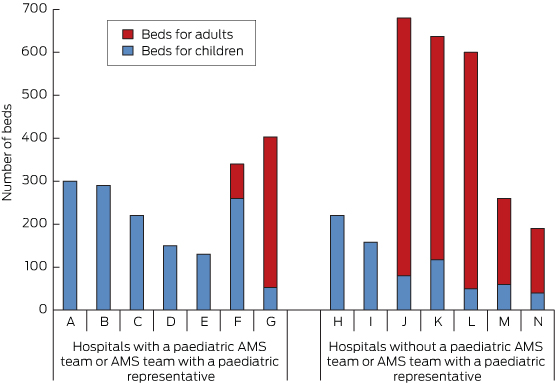

Nine hospitals had AMS programs (in two there was no paediatric representation), and one further AMS program was in development. Five hospitals had a dedicated paediatric AMS team, and only one of the hospitals with a large majority of adults had a paediatric representative on the hospital AMS team (Box 1).

Hospitals with a paediatric AMS team or an AMS team with a paediatric representative had more paediatric beds than those without (mean, 200 [SD, 92] v mean, 104 [SD, 66]; P = 0.04; 95% CI for the difference, 5–191). They also offered more paediatric specialist services (mean, 8 [SD, 1.4] v mean, 4 [SD, 2.4]; P = 0.009; 95% CI for the difference, 1.0–5.6).
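The comparisons above rest on unpaired t tests of group means. As a minimal sketch, assuming the standard pooled-variance (Student's) form, the test statistic and confidence interval for the difference can be computed from summary statistics alone. The function names and the group sizes in the usage example (6 v 8 hospitals) are hypothetical, because the section does not state how many hospitals fell in each group, so this is not intended to reproduce the published P values. A statistics package (for example, SciPy's `scipy.stats.ttest_ind_from_stats`) would also return the P value.

```python
import math
from typing import Tuple


def pooled_t_test(mean1: float, sd1: float, n1: int,
                  mean2: float, sd2: float, n2: int) -> Tuple[float, int, float]:
    """Unpaired Student's t test (equal variances assumed) from summary statistics.

    Returns (t statistic, degrees of freedom, standard error of the difference).
    """
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se_diff = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (mean1 - mean2) / se_diff
    return t, df, se_diff


def confidence_interval(mean1: float, mean2: float,
                        se_diff: float, t_crit: float) -> Tuple[float, float]:
    """CI for the difference in means, given a critical t value for the test's df."""
    diff = mean1 - mean2
    return diff - t_crit * se_diff, diff + t_crit * se_diff


if __name__ == "__main__":
    # Hypothetical group sizes (6 hospitals with, 8 without paediatric AMS representation).
    t, df, se = pooled_t_test(200, 92, 6, 104, 66, 8)
    # 2.179 is the two-sided 95% critical value of t with 12 degrees of freedom.
    low, high = confidence_interval(200, 104, se, 2.179)
    print(f"t = {t:.2f}, df = {df}, 95% CI for the difference {low:.0f} to {high:.0f}")
```

The pooled form matches the usual "unpaired t test"; with group SDs as different as 92 and 66, a Welch (unequal-variance) test would be a reasonable alternative.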

However, having paediatric specialist services did not necessarily mean that a hospital had an AMS program with paediatric representation; for example, seven of the 12 hospitals with a neonatal unit had no such program. Throughout the rest of the survey, paediatric AMS activities were generally associated with having a dedicated paediatric AMS team.

While 11 hospitals had funding for an AMS pharmacist, only eight had a paediatric component (Box 2), and only four of these eight had committed ongoing funding for a permanent paediatric AMS pharmacist. Only two hospitals had any funding for a paediatric ID physician for AMS; both positions were part-time, and the funding for one was about to cease (Box 2). Thus, of the 14 tertiary paediatric hospitals across Australia and New Zealand, a single hospital had an ongoing part-time paediatric ID position dedicated to AMS (half a day per week), amounting to 0.1 equivalent full-time (EFT).
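Assuming a standard five-day working week (the survey does not define EFT further), the 0.1 EFT figure follows from expressing half a day as a fraction of a full week:

$$
\text{EFT} = \frac{0.5\ \text{day per week}}{5\ \text{days per week}} = 0.1
$$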

Guidelines

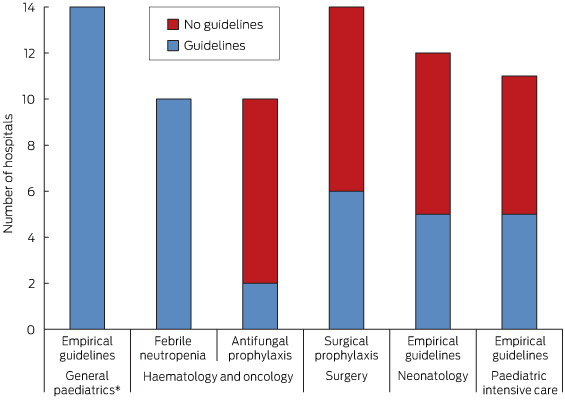

All hospitals had empirical antimicrobial prescribing guidelines and all of those with a haematology and oncology service had guidelines for febrile neutropenia. However, fewer than half of the hospitals had guidelines for antifungal prophylaxis, surgical prophylaxis, neonatology or paediatric intensive care (Box 3).

Review of antimicrobial prescribing

Some point-of-care interventions relating to antimicrobial prescribing were carried out in 12 hospitals, including empirical choice, dose optimisation, de-escalation based on microbiology test results and intravenous to oral switch. At four hospitals, the interventions were used for most antimicrobial prescriptions; for three they were only used in units that have high prescribing rates (eg, haematology and oncology); and for five they were only used in consultation with an ID physician or for restricted antibiotics. For the four hospitals where point-of-care interventions were used for most antimicrobial prescriptions, feedback was provided by the AMS team; for the remainder it was provided by the ID team.

Only two hospitals had automatic stop orders, and these were for restricted antimicrobials.

All hospitals had restricted antimicrobials but only four hospitals had an electronic approval system. At 13 hospitals, approval for restricted drugs was done by ID physicians.

Monitoring

Seven hospitals monitored antimicrobial usage by unit use (eg, number of vials), cost or both. Auditing methods varied widely but were mainly ad hoc: 10 hospitals had done at least one hospital-wide audit including assessment of appropriateness and one did occasional ward audits, but three hospitals had not done any audits. Results of audits were mostly fed back in an untargeted way through grand rounds or clinical quality meetings; only five hospitals provided feedback at departmental meetings and two provided the results to the relevant head of department or consultant. Of the 11 hospitals that did any audits, two did not feed the results back at all.

All hospital microbiology laboratories selectively reported antimicrobial susceptibility results (“cascade reporting”). Only five, however, monitored and regularly reported hospital-wide sensitivity patterns, and a further three reported on selected bacteria only.
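Cascade reporting works by withholding the result for a broader-spectrum agent when the isolate is susceptible to a narrower first-line agent. The sketch below illustrates that logic under stated assumptions: the `CascadeRule` structure, the function names and the single meropenem/ceftriaxone rule are hypothetical examples, not the rules used by any surveyed laboratory and not clinical guidance.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class CascadeRule:
    """Hypothetical rule: release `restricted` only if no `first_line` agent tested susceptible."""
    restricted: str
    first_line: Tuple[str, ...]


def cascade_report(results: Dict[str, str], rules: List[CascadeRule]) -> Dict[str, str]:
    """Return the susceptibility results ("S", "I" or "R") to release to prescribers."""
    suppressed = set()
    for rule in rules:
        # A susceptible first-line agent means the broader agent's result is withheld.
        if any(results.get(drug) == "S" for drug in rule.first_line):
            suppressed.add(rule.restricted)
    return {drug: result for drug, result in results.items() if drug not in suppressed}


# Illustrative rule set only; real rules are set locally by each laboratory.
EXAMPLE_RULES = [CascadeRule("meropenem", ("ceftriaxone",))]
```

For an isolate that is ceftriaxone-susceptible, the meropenem result is withheld; if the isolate is ceftriaxone-resistant, all results are released.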

Education

There was a paucity of education about AMS in general: no hospitals had education for senior medical staff, eight had education for junior medical staff, five for pharmacists, one for nurses, and four had no education for any staff. One hospital covered AMS at hospital orientation.

Barriers to antimicrobial stewardship

In the opinions of paediatric ID physicians and pharmacists, there was a wide range of reasons for slow implementation of AMS. The commonest perceived barriers to successful AMS were lack of education (11 hospitals), lack of dedicated pharmacy staff (eight) and lack of dedicated medical staff (seven). Other perceived barriers included lack of willingness to change, lack of leadership by executive and senior clinicians, lack of enforcement and transient junior staff.

Discussion

This is the first comprehensive survey of AMS resources for hospitalised children, in that it included every children’s hospital in Australia and New Zealand. The survey responses are likely to be highly reliable, as they were completed at each hospital by the most qualified local staff: an ID physician, AMS paediatrician or AMS pharmacist.

The results represent large hospitals with paediatric patients. There are many other hospitals with paediatric patients across both countries and, as these would predominantly have fewer children, paediatric AMS resources are likely to be even more scarce.

To our knowledge, only one other study of paediatric AMS programs in hospitals has been conducted. This was a larger study that was done in the United States, but it had only a 60% response rate and did not include all children’s hospitals.7

Personnel resources

In our study, most hospitals (10 of 14) had an AMS program, either in existence or in development, which compares favourably with the 33% reported in the US study.7 This may reflect the fact that AMS has been a requirement of national hospital accreditation in Australia since 1 January 2013.

Hospitals included in our study that had a majority of children and those that offered more specialist services were more likely to have a paediatric AMS team or representative. While this is not surprising, it raises the question of how hospitals with fewer children might manage.

One possibility is to link in with the hospital’s adult AMS services. However, having a high number of adult patients did not necessarily equate to having an adult AMS team (data not shown), and differences between children and adults (in terms of antimicrobial use, measurement of antimicrobial use and patient outcomes) would make it difficult to use combined resources.

An alternative model is to have a network of paediatric AMS services between hospitals to share resources. The ANZPID-ASAP group is one such network of paediatric ID physicians and AMS pharmacists with representation from every state and territory in Australia and from New Zealand. Resources such as AMS guidelines, surveys and data on antimicrobial use can be, and have been, shared among the group.

Members of the ANZPID-ASAP group also provide information and consultations via email for practical antimicrobial questions. This model could be replicated at a local level. It is less straightforward to share personnel resources such as AMS pharmacists, although this may be possible in a geographically smaller area such as a city that has several hospitals.

Paediatric representation on adult AMS networks for the acquisition and dissemination of information is also important. One such network is the Australian Commission on Safety and Quality in Health Care Antimicrobial Stewardship Jurisdictional Network.

The total of 0.1 EFT of ongoing paediatric ID physician time dedicated to AMS in the whole of Australasia is concerning. Even the proportion of hospitals with any paediatric AMS pharmacist time was only just over half (eight of 14). In the US study, almost half of AMS programs had greater than 0.25 EFT of paediatric ID physician time, but up to 40% had none and 40% had no paediatric AMS pharmacist.7

Guidelines

It is reassuring that every hospital had empirical guidelines for children attending the emergency department, as emergency departments have a high turnover of junior medical staff and having guidelines to direct empirical antimicrobial decision making for children is important.9

Conversely, the lack of guidelines in specialist areas is concerning. This may reflect lack of evidence or resources, or complacency in some instances. In some areas (eg, antifungal prophylaxis for haematology and oncology patients), there is little specific evidence in children.10

Lack of evidence, however, should not be an impediment to consensus guidelines. In other areas (eg, surgical prophylaxis), adult guidelines can be used. Guidelines should always be adapted to the specific population, taking into account age, disease burden and resistance patterns of local organisms. Guidelines help to standardise prescribing and represent an area for improvement.

Review of antimicrobial prescribing

The hospitals that were able to do most point-of-care interventions were those where the AMS team was heavily involved (data not shown), indicating that widespread AMS activities are dependent on time and resources.

The low rate of automatic stop orders is a potential area for intervention. AMS programs in hospitals are likely to have a greater impact on strategies for stopping antimicrobials than on those for starting antimicrobials. By having AMS systems and processes built into everyday workflow for patient care (eg, guidelines, protocols, routine data collection on antibiotic use), the need for individual patients to have consultations with ID, microbiology or AMS teams decreases.
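An automatic stop order is one way of building AMS into everyday workflow. As a minimal sketch of how such a rule might operate inside an electronic prescribing system, the code below ends restricted antimicrobial orders whose approval has lapsed, or which have run beyond a time limit without approval. The `Order` record, its field names and the 72-hour default are all hypothetical assumptions; the survey does not describe how the two hospitals with automatic stop orders implemented them.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Order:
    """A hypothetical antimicrobial order record; field names are illustrative."""
    drug: str
    start: datetime
    restricted: bool
    approved_until: Optional[datetime] = None  # end of current ID approval, if any


def orders_due_to_stop(orders: List[Order], now: datetime,
                       unapproved_limit: timedelta = timedelta(hours=72)) -> List[Order]:
    """Return restricted orders that the assumed automatic stop-order rule would end.

    Assumed rule: a restricted drug stops when its approval has lapsed or, if it was
    never approved, once it has run longer than `unapproved_limit`.
    Unrestricted drugs are never stopped automatically in this sketch.
    """
    due = []
    for order in orders:
        if not order.restricted:
            continue
        if order.approved_until is not None:
            # Approved orders stop when the approval period ends.
            if now >= order.approved_until:
                due.append(order)
        elif now - order.start > unapproved_limit:
            # Unapproved orders stop once they exceed the time limit.
            due.append(order)
    return due
```

In practice a system would usually alert the prescriber before the stop takes effect, so that a clinically necessary course can be reviewed and re-approved.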

While all hospitals had a list of restricted drugs, a minority had an electronic approval system. Phone approval systems are easier to circumvent, intentionally or unintentionally, so use of the drugs that are most important to preserve may go unchecked. It has been suggested that prospective audit of and feedback on the use of selected drugs would be more effective than restriction and approval.6 However, audits are time consuming and require dedicated AMS personnel.

Monitoring

Only half of the hospitals included in our survey (seven of 14) routinely monitored antimicrobial use, by unit use and/or cost, which is similar to the 55% reported in the US study,7 and fewer than half monitored hospital-wide organism resistance patterns (antibiograms). These represent two of the few measurable outcomes for paediatric patients.11

Because dosing for children is weight-based, unit use and cost do not provide a useful snapshot of antimicrobial use, although they can be used to monitor trends. One innovative strategy that has been proposed is adapting methods used for adults — for example, using average weight bands for different paediatric age groups12 — although such strategies have not been validated.
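A weight-band adaptation can be illustrated by analogy with the adult defined daily dose approach, in which total grams dispensed are divided by a fixed standard adult daily dose. In the sketch below, that fixed dose is replaced by a dose calculated from an assumed average weight for an age band. The function names, the 15 kg band weight, the 100 mg/kg/day dose and the usage figures are hypothetical illustrations only, and, as noted above, such strategies have not been validated.

```python
def estimated_days_of_therapy(grams_dispensed: float,
                              dose_mg_per_kg_per_day: float,
                              band_weight_kg: float) -> float:
    """Convert grams dispensed into estimated patient-days of therapy.

    Assumes every recipient weighs the band's average weight and receives the
    stated mg/kg/day dose; both inputs are hypothetical, unvalidated parameters.
    """
    daily_dose_mg = dose_mg_per_kg_per_day * band_weight_kg
    return grams_dispensed * 1000 / daily_dose_mg


def per_1000_patient_days(days_of_therapy: float, patient_days: float) -> float:
    """Express use as a rate, a common denominator for comparing use over time."""
    return 1000 * days_of_therapy / patient_days


if __name__ == "__main__":
    # Hypothetical example: 30 g dispensed, 100 mg/kg/day, 15 kg band weight.
    days = estimated_days_of_therapy(30, 100, 15)
    print(days, per_1000_patient_days(days, patient_days=400))
```

With these assumed inputs, 30 g corresponds to 20 estimated days of therapy, or 50 per 1000 patient-days over 400 patient-days. The estimate is only as good as the assumed band weight, which is why such conversions are better suited to tracking trends than to comparing hospitals.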

In the absence of electronic systems that enable the number of days of antimicrobial use to be recorded, audits in the form of antimicrobial point prevalence surveys remain the mainstay of paediatric monitoring. In our study, 10 hospitals had done at least one hospital-wide audit; of those 10, eight were involved in an international point prevalence survey during 2012, undertaken in Australia by the ANZPID-ASAP group.13,14 To be useful, such surveys (which are labour intensive) need to be repeated.
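The two headline figures of a point prevalence survey can be sketched as follows: the percentage of inpatients receiving an antimicrobial on the survey day, and the percentage of assessed treatment judged appropriate. The `SurveyedPatient` record and function names are hypothetical, and for simplicity appropriateness is recorded per patient here, whereas a real survey would usually assess each prescription.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SurveyedPatient:
    """One inpatient on the survey day (hypothetical record layout)."""
    on_antimicrobial: bool
    appropriate: Optional[bool] = None  # None if not on an antimicrobial or not assessed


def point_prevalence(patients: List[SurveyedPatient]) -> float:
    """Percentage of surveyed inpatients receiving at least one antimicrobial."""
    if not patients:
        raise ValueError("no patients surveyed")
    on_treatment = sum(p.on_antimicrobial for p in patients)
    return 100 * on_treatment / len(patients)


def percent_appropriate(patients: List[SurveyedPatient]) -> float:
    """Percentage of assessed patients on antimicrobials whose treatment was appropriate."""
    assessed = [p for p in patients if p.on_antimicrobial and p.appropriate is not None]
    if not assessed:
        raise ValueError("no treatment assessed")
    return 100 * sum(p.appropriate for p in assessed) / len(assessed)
```

Because each survey is a single snapshot, repeating it with the same definitions is what allows these percentages to be compared over time.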

Hospital antibiograms are helpful for drafting guidelines that account for local resistance patterns, and for detecting significant changes over time, to inform empirical antimicrobial choices.15 It is possible that hospitals will eventually move away from antibiograms, and instead adopt strategies such as mathematical modelling and likelihood of inadequate therapy,16 but antibiograms are the best choice at present.
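A cumulative antibiogram is essentially a table of percentage susceptible for each organism and antimicrobial pair. The sketch below assumes the convention commonly attributed to CLSI M39 guidance: only the first isolate of each organism per patient in the reporting period is counted, and estimates based on fewer than 30 isolates are flagged as less reliable. The `Isolate` record and function name are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class Isolate(NamedTuple):
    patient_id: str
    organism: str
    collected: str  # ISO date, e.g. "2013-06-01", so string order is chronological
    results: Dict[str, str]  # antimicrobial -> "S", "I" or "R"


def cumulative_antibiogram(isolates: List[Isolate],
                           min_isolates: int = 30) -> Dict[Tuple[str, str], Tuple[float, int, bool]]:
    """Map (organism, antimicrobial) to (% susceptible, isolates tested, meets minimum count)."""
    seen = set()
    tested: Dict[Tuple[str, str], int] = defaultdict(int)
    susceptible: Dict[Tuple[str, str], int] = defaultdict(int)
    for iso in sorted(isolates, key=lambda i: i.collected):
        key = (iso.patient_id, iso.organism)
        if key in seen:
            continue  # repeat isolates of the same organism from the same patient are excluded
        seen.add(key)
        for drug, result in iso.results.items():
            tested[(iso.organism, drug)] += 1
            if result == "S":
                susceptible[(iso.organism, drug)] += 1
    return {pair: (100 * susceptible[pair] / n, n, n >= min_isolates)
            for pair, n in tested.items()}
```

Excluding repeat isolates prevents a few long-stay patients with persistent resistant infections from skewing the hospital-wide percentages.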

Education

Lack of AMS education for senior medical staff in the hospitals we surveyed likely reflects a general lack of hospital-based education programs for this group. Since senior medical staff are crucial in supporting AMS principles, this area needs to be addressed. While junior medical staff received the most education, this was often under the umbrella of other teaching that included antibiotics (eg, management of urinary tract infections) rather than specific teaching about AMS. Other clinical staff (pharmacists and nurses) received even less education, which fails to support the notion of AMS as a multidisciplinary activity. In the US study, 64% of AMS programs had education as a specific component.7 For all but one hospital in our study, AMS was not considered important enough to be included in hospital orientation.

Barriers to antimicrobial stewardship

While the US study identified perceived loss of prescribing autonomy and lack of hospital administrative awareness about the importance of AMS as barriers, lack of resources ranked most important (70% of respondents).7 Two recent Australian adult AMS surveys found that lack of education, lack of pharmacy resources and lack of ID and microbiology resources were in the top three perceived barriers to AMS.8,17

In our study, the main barriers to effective AMS that were identified by the paediatric ID physicians and AMS pharmacists of Australia and New Zealand who completed the survey were lack of education and lack of personnel (dedicated pharmacy and medical staff). The latter may reflect the difficulty in showing cost benefits in paediatric health care compared with adult health care: for example, incidence of Clostridium difficile infection is not a useful outcome measure in paediatrics. Other measures, such as reduced length of hospital and intensive care unit stay, may be more useful in children,15 but these reductions cannot be realised without resources.

Lack of education and lack of personnel are the two main areas that should be highlighted when informing hospital executives about best practice in AMS, and addressing them should be a focus of quality improvement activities in the care of children in hospital.

Conclusions

Australasian children’s hospitals have implemented some AMS activities, such as audits of antimicrobial use and monitoring of antimicrobial resistance, but most lack human resources. There was consensus among the staff who completed our survey that lack of education and personnel are major barriers to effective AMS. These must be addressed to improve antimicrobial use in hospitalised children.

Members of the Australian and New Zealand Paediatric Infectious Diseases Group – Australasian Stewardship of Antimicrobials in Paediatrics (ANZPID-ASAP) group

Penelope Bryant (Chair) (Royal Children’s Hospital, Melbourne), David Andresen (Children’s Hospital at Westmead, Sydney), Minyon Avent (Mater Children’s Hospital, Brisbane), Sean Beggs (Royal Hobart Hospital, Hobart), Chris Blyth (Princess Margaret Hospital for Children, Perth), Asha Bowen (Menzies School of Health Research, Darwin), Celia Cooper (Women’s and Children’s Hospital, Adelaide), Nigel Curtis (Royal Children’s Hospital, Melbourne), Julia Clark (Royal Children’s Hospital, Brisbane), Andrew Daley (Royal Children’s Hospital, Melbourne), Jacky Dobson (Canberra Hospital, Canberra), Gabrielle Haeusler (Monash Children’s Hospital, Melbourne), David Isaacs (Children’s Hospital at Westmead, Sydney), Brendan McMullan (Sydney Children’s Hospital, Sydney), Pam Palasanthiran (Sydney Children’s Hospital, Sydney), Tom Snelling (Princess Margaret Hospital for Children, Perth), Mike Starr (Royal Children’s Hospital, Melbourne), Lesley Voss (Starship Children’s Health, Auckland).

1 Paediatric and adult bed numbers at hospitals with and without a paediatric antimicrobial stewardship (AMS) team or AMS team with a paediatric representative in June 2013

2 Numbers of hospitals with antimicrobial stewardship (AMS) pharmacists and/or AMS infectious diseases physicians in June 2013 (n = 14)

| | Number |
|---|---|
| AMS pharmacists | |
| Paediatric full-time | 2 |
| Paediatric part-time | 6 |
| Adult only | 1 |
| Paediatric position not filled, no ongoing funding | 2 |
| None | 3 |
| AMS infectious diseases physicians | |
| Paediatric full-time | 0 |
| Paediatric part-time | 1 |
| Adult only | 2 |
| Paediatric position not filled, no ongoing funding | 1 |
| None | 10 |

3 Numbers of hospitals with and without antimicrobial prescribing guidelines for different services (n = 14)
